UniView updated: Show age, context-sensitive help, highlighting, etc.

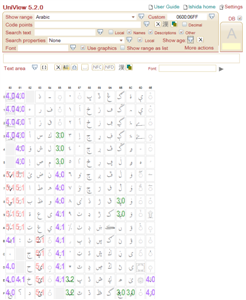

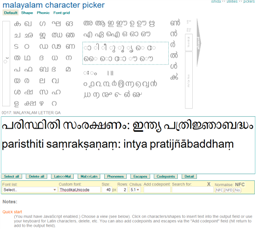

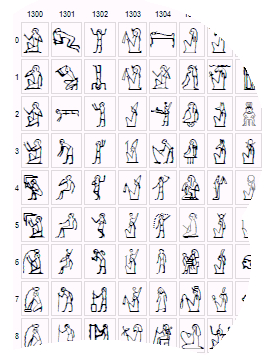

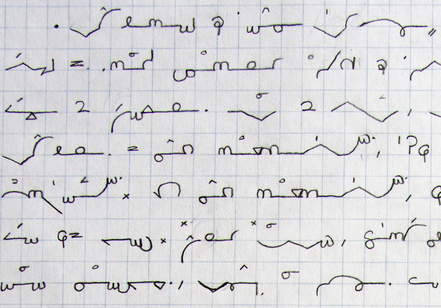

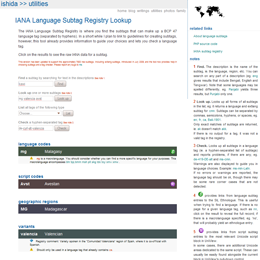

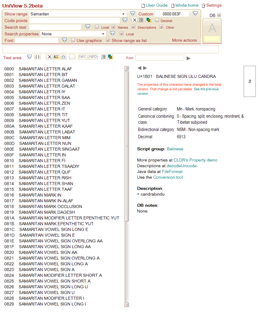

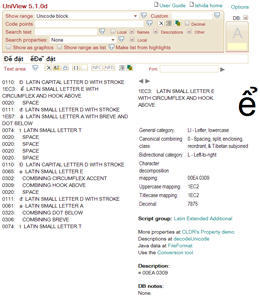

About the tool: Look up and see characters (using graphics or fonts) and property information, view whole character blocks or custom ranges, select characters to paste into your document, paste in and discover unknown characters, search for characters, do hex/dec/NCR conversions, highlight character types, and more. It supports Unicode 5.2 and is written with Web Standards to work on a variety of browsers. There is no need to install anything.
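To make the hex/dec/NCR conversions concrete, here is a minimal Python sketch of what they involve. It is not UniView's code (UniView runs in the browser). The function names are hypothetical, and the sketch assumes hex output is zero-padded to at least four digits, as in the usual U+ notation.

```python
import re

def to_hex(text: str) -> str:
    """Space-separated hex code points, padded to at least 4 digits."""
    return " ".join(f"{ord(c):04X}" for c in text)

def to_dec(text: str) -> str:
    """Space-separated decimal code points."""
    return " ".join(str(ord(c)) for c in text)

def to_ncr(text: str) -> str:
    """Hexadecimal numeric character references, e.g. &#xE9;."""
    return "".join(f"&#x{ord(c):X};" for c in text)

def from_hex(codes: str) -> str:
    """Turn space-separated hex code points back into characters."""
    return "".join(chr(int(h, 16)) for h in codes.split())

# Matches both hex (&#x..;) and decimal (&#..;) references.
_NCR = re.compile(r"&#([xX][0-9A-Fa-f]+|[0-9]+);")

def from_ncr(text: str) -> str:
    """Replace every numeric character reference with its character."""
    def repl(m: re.Match) -> str:
        ref = m.group(1)
        cp = int(ref[1:], 16) if ref[0] in "xX" else int(ref)
        return chr(cp)
    return _NCR.sub(repl, text)
```

For example, `to_hex("Aé")` gives `0041 00E9`, and `from_ncr("&#x41;&#233;")` gives `Aé`. Because Python strings iterate by code point, supplementary characters are handled without surrogate-pair logic.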

Latest changes: The major change in this update is the addition of a function, Show age, which shows the version of Unicode in which a character was added (after version 1.1). The same information is also listed in the details given for a character in the lower right panel.
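The Unicode Character Database publishes character age in the file DerivedAge.txt, where each line maps a code point or range to the version that introduced it. The Python sketch below parses lines in that format and looks up a code point. It is only an assumption that UniView draws on this file. The two sample lines follow the file's format but are written for illustration, not copied from it, and `parse_ages` and `age_of` are hypothetical helper names.

```python
from typing import List, Optional, Tuple

# Illustrative lines in the DerivedAge.txt format: "range ; age # comment".
SAMPLE = """\
0000..007F    ; 1.1 # Basic Latin
20AC          ; 2.1 # EURO SIGN
"""

def parse_ages(text: str) -> List[Tuple[int, int, str]]:
    """Return (first, last, age) tuples, one per data line."""
    ranges = []
    for line in text.splitlines():
        data = line.split("#", 1)[0].strip()  # drop comments
        if not data:
            continue  # skip blank and comment-only lines
        cps, age = (field.strip() for field in data.split(";"))
        first, _, last = cps.partition("..")
        lo = int(first, 16)
        hi = int(last, 16) if last else lo  # single code point
        ranges.append((lo, hi, age))
    return ranges

def age_of(cp: int, ranges: List[Tuple[int, int, str]]) -> Optional[str]:
    """Linear search; a real tool might sort ranges and use bisect."""
    for lo, hi, age in ranges:
        if lo <= cp <= hi:
            return age
    return None  # not listed, i.e. unassigned in this data
```

With `ranges = parse_ages(SAMPLE)`, `age_of(0x20AC, ranges)` returns `"2.1"`, and a code point outside every listed range returns `None`.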

The trigger for context-sensitive help was reduced to the first character of a command name, rather than the whole command name. This improves the behaviour of commands under More actions: you can now activate a command by clicking its name, not just the icon alongside it.

Some ‘quick start’ instructions were also added to the initial display to orient people new to the tool, and this help text was updated in various areas.

The highlighting mechanism was changed. Rather than highlight characters using a coloured border (which is typically not very visible), highlighting now works by greying out characters that are not highlighted. This also makes it clearer when nothing is highlighted.
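The greying-out approach can be sketched as follows. Every character cell gets a base class, and cells that do not match the active highlight get an extra class that a stylesheet would render in grey. This is a hypothetical Python rendering sketch, not UniView's JavaScript, and the class names `cell` and `dimmed` and the function `render_matrix` are invented for illustration. Passing `None` means no highlight is active, so nothing is greyed. An active highlight with no matches greys out every cell, which makes an empty result easy to spot.

```python
from html import escape
from typing import Callable, Iterable, Optional

def render_matrix(chars: Iterable[str],
                  is_highlighted: Optional[Callable[[str], bool]]) -> str:
    """Render characters as spans, dimming those outside the highlight."""
    cells = []
    for ch in chars:
        classes = "cell"
        # Only dim when a highlight is active and this character misses it.
        if is_highlighted is not None and not is_highlighted(ch):
            classes += " dimmed"
        cells.append(f'<span class="{classes}">{escape(ch)}</span>')
    return "".join(cells)
```

Putting the grey styling on a class rather than an inline `style` attribute keeps the presentation in the stylesheet, in line with the move from style attributes to class names described in this update.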

In the recent past, when you converted a matrix to a list in the lower left panel, greyed-out rows would be added for non-characters. These are no longer displayed. Consequently, the command to remove such rows from the list (previously under More actions) has been removed.
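A list view that simply omits rows for such code points might look like the sketch below. It assumes that "non-characters" here means code points with General Category `Cn`, that is, unassigned code points, which include the formal noncharacters such as U+FFFE and U+FFFF. Python's `unicodedata` reflects the Unicode version bundled with the interpreter, which is much newer than 5.2, so results for more recently added characters will differ from UniView's. `list_rows` is a hypothetical name.

```python
import unicodedata
from typing import List, Tuple

def list_rows(first: int, last: int) -> List[Tuple[str, str]]:
    """One (U+XXXX, name) row per assigned code point in [first, last]."""
    rows = []
    for cp in range(first, last + 1):
        ch = chr(cp)
        if unicodedata.category(ch) == "Cn":
            continue  # unassigned or noncharacter: no row at all
        # Some assigned characters (e.g. controls) have no name.
        rows.append((f"U+{cp:04X}", unicodedata.name(ch, "")))
    return rows
```

For the range U+FFFC..U+FFFF this yields rows only for U+FFFC OBJECT REPLACEMENT CHARACTER and U+FFFD REPLACEMENT CHARACTER; the two noncharacters are skipped rather than shown as greyed-out rows.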

A lot of invisible work went into replacing style attributes in the code with class names. This produces better source code, but doesn’t affect the user experience.

, rather than in the right-hand properties panel. This is to improve consistency and avoid surprises.

May 12th, 2010 at 10:46 am

Hi Guys,

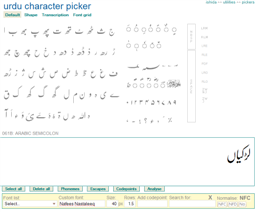

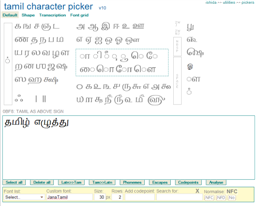

I like your pickers, and what appeals to me even more is that you’ve added transliteration, which is a very nice feature for any picker.

Paul