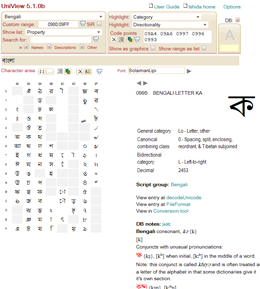

UniView 5.1.0c now available

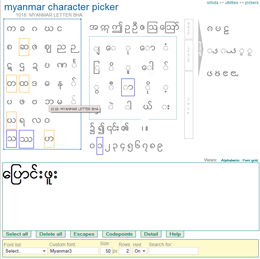

The major changes in this version relate to the way searching and property-based lookup are done on characters in the lower left panel, and to new features for refining and capturing the resulting lists.

Removed the two Highlight selection boxes. These used to highlight characters in the lower left panel with a specific property value. The Show selection box on the left (used to be Show list) now does that job if you set the Local checkbox alongside it. (Local is the default for this feature.)

As part of that move, the former SiR (search in range) checkbox that used to be alongside Custom range has been moved below the Search for input field, and renamed to Local. If Local is checked, searching can now be done on any content in the lower left panel, and the results are shown as highlighting, rather than a new list.
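UniView is a web application and its source is not reproduced here. The following is only a minimal Python sketch of the idea, assuming the lower left panel can be modelled as a list of code points. With Local set, a search or a Show property lookup produces a set of items to highlight, and the panel list itself is left unchanged. The function names `local_search` and `local_show` are hypothetical, and name matching by substring is an assumption about how searching works.

```python
import unicodedata

# Hypothetical model: the lower left panel is a list of code points (ints).
# With Local checked, a search or property lookup yields a set of code points
# to highlight; the panel list itself is not replaced.

def local_search(panel, term):
    """Highlight panel items whose Unicode name contains term (case-insensitive)."""
    term = term.upper()
    return {cp for cp in panel if term in unicodedata.name(chr(cp), "")}

def local_show(panel, general_category):
    """Highlight panel items with a given General_Category value, e.g. 'Ll'."""
    return {cp for cp in panel if unicodedata.category(chr(cp)) == general_category}

# Example panel: the Basic Latin letters A-Z and a-z.
panel = list(range(0x41, 0x5B)) + list(range(0x61, 0x7B))
by_name = local_search(panel, "letter a")   # highlights U+0041 and U+0061
by_category = local_show(panel, "Ll")       # highlights the 26 lowercase letters
```

Both functions return highlights rather than a new list, which mirrors the description above: the panel keeps its content, and only the highlighting changes.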

To complement these new highlighting capabilities, a new feature was added. If you click on the icon next to Make list from highlights, the content of the lower left panel will be replaced by a list of just those items that are currently highlighted – whether the highlighting results from a search or a property listing. Note that this can also be useful for refining searches: perform an initial search, convert the result to a list, then perform another search on that list, and so on.
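The search-then-convert refinement loop can be sketched in Python as follows. This is not UniView's code: it assumes the panel is a list of code points, that a local search matches a substring of the Unicode character name, and that converting highlights to a list keeps the panel's original order. `local_search` and `make_list_from_highlights` are hypothetical names.

```python
import unicodedata

def local_search(panel, term):
    """Return the set of panel code points whose Unicode name contains term."""
    term = term.upper()
    return {cp for cp in panel if term in unicodedata.name(chr(cp), "")}

def make_list_from_highlights(panel, highlighted):
    """Replace the panel with only the highlighted items, keeping their order."""
    return [cp for cp in panel if cp in highlighted]

# Start from U+00C0..U+00FF, then narrow the list in two passes.
panel = list(range(0x00C0, 0x0100))
step1 = make_list_from_highlights(panel, local_search(panel, "with acute"))  # 12 letters
step2 = make_list_from_highlights(step1, local_search(step1, "small"))      # 6 letters
result = "".join(chr(cp) for cp in step2)
```

Each pass searches only the list produced by the previous one, so the result narrows from 64 code points to the 12 letters with acute, and then to the 6 lowercase ones.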

Finally got around to putting icons after the pull-down lists. This means that if you want to reapply, say, a block selection after doing something else, only one click is needed (rather than having to choose another option, then choose the original option). The effect of this on the ease of use of UniView is much greater than I expected.

Added an icon to the text area. If you click on this, all the characters in the lower left panel are copied into the text area. This is very useful for capturing the result of a search, or even a whole block. Note that if a list in the lower left panel contains unassigned code points, these are not copied to the text area.
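As a rough Python illustration of that copy step, and not UniView's actual implementation, the sketch below joins the panel's code points into a string and drops unassigned ones. It assumes "unassigned" corresponds to General_Category `Cn` in the Unicode data bundled with the running Python version. Note that `Cn` also covers noncharacters such as U+FFFF, which UniView may treat differently. `copy_panel_to_text` is a hypothetical name.

```python
import unicodedata

def copy_panel_to_text(panel):
    """Join panel code points into a string, skipping unassigned ones.

    Assumption: 'unassigned' means General_Category Cn in this Python build's
    Unicode data (Cn also includes noncharacters such as U+FFFF).
    """
    return "".join(chr(cp) for cp in panel
                   if unicodedata.category(chr(cp)) != "Cn")

# U+0378 is an unassigned code point in the Greek and Coptic block.
panel = [0x0041, 0x0378, 0x0042]
text_area = copy_panel_to_text(panel)  # "AB"
```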

As a result of the above changes, the internals of Show as graphics and Show range as list were essentially rewritten, but users shouldn't see the difference.

Changed the label Character area to Text area.