Managing CSS style sheets for LTR and RTL variants of a page

I created a new HTML5-based template for our W3C Internationalization articles recently, and I’ve just received some requests to translate documents into Arabic and Hebrew, so I had to get around to updating the bidi style sheets. (To make it quicker to develop styles, I create the style sheet for ltr pages first, and only when that is working well do I create the rtl style sheet info.)

Here are some thoughts about how to deal with style sheets for both right-to-left (rtl) and left-to-right (ltr) documents.

What needs changing?

Converting a style sheet is a little more involved than using a global search and replace to convert left to right, and vice versa. While this may catch many of the things that need changing, it won’t catch all, and it could also introduce errors into the style sheet.

For example, I had selectors called .topleft and .bottomright in my style sheet. These, of course, shouldn’t be changed. There may also be occasional situations where you don’t want to change the direction of a particular block.

Another thing to look out for: I tend to use -left and -right a lot when setting things like margins, but where I have set something like margin: 1em 32% .5em 7.5%; you can’t just use search and replace, and you have to carefully scour the whole of the main stylesheet to find the instances where the right and left margins are not balanced.
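As a rough illustration of these two pitfalls, here is a small sketch of an ltr fragment and its hand-converted rtl counterpart. The .topleft selector name and the margin values come from the discussion above; the declaration inside .topleft is made up for the example.

```css
/* ltr style sheet */
.topleft { top: 0; }                 /* a blind replace would rename this to .topright */
p { margin: 1em 32% .5em 7.5%; }     /* shorthand order: top, right, bottom, left */

/* rtl style sheet, converted by hand */
.topleft { top: 0; }                 /* selector name kept as-is */
p { margin: 1em 7.5% .5em 32%; }     /* right and left values swapped */
```

Neither change would be found by searching for the strings left and right in property names, which is why the shorthand values need a manual check.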

There is a web service called CSSJanus that can apply a little intelligence to convert most of what you need. You still have to use it with care, but it does come with a convention to prevent conversion of properties where needed (you can disable CSSJanus from running on an entire class or any rule within a class by putting a /* @noflip */ comment before the rule(s) you want CSSJanus to ignore).
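The following sketch shows how the /* @noflip */ convention might be used in a source style sheet. Apart from .topleft, the class names and declarations are hypothetical, and the comments describe the behaviour I would expect from CSSJanus rather than verified output.

```css
/* The whole rule is protected: nothing inside it should be flipped. */
/* @noflip */
.topleft {
  position: absolute;
  top: 0;
  left: 0;
}

/* Ordinary rule: the left and right values are candidates for flipping. */
.sidenote {
  float: right;
  margin-left: 1em;
}

/* Only the first declaration is protected; the margin can still be flipped. */
.logo {
  /* @noflip */ background-position: left top;
  margin-right: 2em;
}
```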

Note also that there are other things that may need changing besides the right and left values. For example, some of the graphics on our template need to be flipped (such as the dog-ear icon in the top corner of the page).

CSS may provide a way to do this in the future, but it is still only a proposal in a First Public Working Draft at the moment. (It would involve writing a selector such as #site-navigation:dir(rtl) { background-image: url(standards-corner-rtl.png); }.)

Approach 1: extracting changed properties to an auxiliary style sheet

For the old template I have a secondary, bidi style sheet that I load after the main style sheet. This bidi style sheet contains a copy of just the rules in the main style sheet that needed changing and overwrites the styles in the main style sheet. These changes were mainly to margin, padding, and text-align properties, though there were also some others, such as positioning, background and border properties.

The cons of this approach were:

- it’s a pain to create and maintain a second style sheet in the first place

- it’s an even bigger pain to remember to copy any relevant changes in the main style sheet to the bidi style sheet, not least because the structure is different, and it’s a little harder to locate things

- everywhere that the main style sheet declared, say, a left margin without declaring a value for the right margin, you have to figure out what that other margin should be and add it to the bidi style sheet. For example, if a figure has just margin-left: 32%, that will be converted to margin-right: 32%, but because the bidi style sheet hasn’t overwritten the main style sheet’s margin-left value, the Arabic page will end up with both margins set to 32%, and a much thinner figure than desired. To prevent this, you need to figure out what all those missing values should be, which is typically not straightforward, and add them explicitly to the bidi style sheet.

- downloading a second style sheet and overwriting styles leads to higher bandwidth consumption and more processing work for the rtl pages.
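The missing-value problem in the third point can be sketched as follows. The file names and the .figure class are placeholders, and the reset value of 0 is an assumption: in a real style sheet you would have to work out what the unset margin actually resolves to.

```css
/* main.css (loaded by every page) */
.figure { margin-left: 32%; }

/* bidi.css (loaded after main.css, on rtl pages only) */
.figure {
  margin-right: 32%;  /* the mirrored value */
  margin-left: 0;     /* explicit reset; without it both margins are 32% */
}
```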

Approach 2: copying the whole style sheet and making changes

This is the approach that I’m trying for the moment. Rather than painstakingly picking out just the lines that changed, I take a copy of the whole main style sheet, and load that with the article instead of the main style sheet. Of course, I still have to change all the lefts to rights, and vice versa, and change all the graphics, etc. But I don’t need to add additional rules in places where I previously only specified one side margin, padding, etc.

We’ll see how it works out. Of course, the big problem here is that any change I make to the main style sheet has to be copied to the bidi style sheet, whether it is related to direction or not. Editing in two places is definitely going to be a pain, and breaks the big advantage that style sheets usually give you of applying changes with a single edit. Hopefully, if I’m careful, CSSJanus will ease that pain a little.

Another significant advantage should be that the page loads faster, because you don’t have to download two style sheets and overwrite a good proportion of the main style sheet to display the page.

And finally, as long as I format things exactly the same way, by running a diff program I may be able to spot where I forgot to change things in a way that’s not possible with approach 1.

Approach 3: using :lang and a single file

On the face of it, this seems like a better approach. Basically you have a single style sheet, but when you have a pair of rules such as p { margin-right: 32%; margin-left: 7.5%;} you add another line that says p:lang(ar) { margin-left: 32%; margin-right: 7.5%; }.

For small style sheets, this would probably work fine, but in my case I see some cons with this approach, which is why I didn’t take it:

- there are so many places where these extra lines need to be added that it will make the style sheet much harder to read, and this is made worse because the p:lang(ar) in the example above would actually need to be p:lang(ar), p:lang(he), p:lang(ur), p:lang(fa), p:lang(dv) ..., which gets very messy and also significantly pumps up the bandwidth and processing requirements compared with approach 2 (and not only for rtl docs).

- you still have to add all those missing values we talked about in approach 1 that were not declared in the part of the style sheet dealing with ltr scripts

- the list of languages could be long, since there is no way to say “make this rule work for any language with a predominantly rtl script”, and obscures those rules that really are language specific, such as for font settings, that I’d like to be able to find quickly when maintaining the style sheet

- you really need to use the :lang() selector for this, and although it works on all recent versions of major browsers, it doesn’t work on, for example, IE6
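To make the readability concern concrete, here is a sketch of what a single mirrored rule looks like under this approach, using the five language tags listed above (a real list could be longer). The font rule at the end is a made-up example of a genuinely language-specific setting, and the font name is a placeholder.

```css
/* Base (ltr) rule. */
p { margin-right: 32%; margin-left: 7.5%; }

/* Mirrored rule: one selector per rtl-script language tag. */
p:lang(ar), p:lang(he), p:lang(ur), p:lang(fa), p:lang(dv) {
  margin-right: 7.5%;
  margin-left: 32%;
}

/* A genuinely language-specific rule, easy to lose among rules like the one above. */
p:lang(ar) { font-family: "Example Arabic Font", serif; }
```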

Having said that, I may use this approach for the few things that CSSJanus can’t convert, such as flipping images. That will hopefully mean that I can produce the alternative stylesheet in approach 2 just by running through CSSJanus. (We’ll see if I’m right in the long run, but so far so good…)

Approach 4: what I’d really like to do

The cleanest way to reduce most of these problems would be to add some additional properties or values so that if you wanted to you could replace

p { margin-right: 32%; margin-left: 7.5%; text-align: left; }

with

p { margin-end: 32%; margin-start: 7.5%; text-align: start; }

Here start refers to the left for ltr documents and the right for rtl documents, and end is the converse.

This would mean that a single rule would work for both ltr and rtl pages and I wouldn’t have to worry about most of the above.
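For comparison, this idea was later standardised in the CSS Logical Properties and Values specification, although under different property names from the ones proposed here. A minimal sketch of the ltr rule p { margin-right: 32%; margin-left: 7.5%; text-align: left; } rewritten that way, assuming a browser that supports these properties:

```css
p {
  margin-inline-end: 32%;     /* right in ltr text, left in rtl text */
  margin-inline-start: 7.5%;  /* left in ltr text, right in rtl text */
  text-align: start;
}
```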

The new properties have been strongly recommended to the CSS WG several times over recent years, but have been blocked mainly by people who fear that a proliferation of properties or values is confusing to users. There may be some issues to resolve with regard to the cascade, but I’ve never really understood why it’s so hard to use start and end. Nor have I met any users of RTL scripts (or vertical scripts, for that matter) who find using start and end more confusing than using right and left – in fact, on the contrary, the ones I have talked with are actively pushing for the introduction of start and end to make their life easier. But it seems we are currently still at an impasse.

text-align

Similarly, start and end values for text-align would be very useful. In fact, these values are in the CSS3 Text module and are already recognised by the latest versions of Firefox, Safari and Chrome, but unfortunately not by IE8 or Opera, so I can’t really use them yet.

In my style sheet, due to some bad design on my part, what I actually needed most of the time was a value that says “turn off justify and apply the current default”, i.e. align the text to left or right depending on the current direction of the text. Unfortunately, I think that we have to wait for full support of the start and end values to do that. Applying text-align:left to unjustify, say, p elements in a particular context causes problems if some of those p elements are rtl and others ltr. This is because, unlike mirroring margins or padding, text-align is more closely associated with the text itself than with page geometry. (I resolved this by reworking the style sheet so that I don’t need to unjustify elements, but I ought to follow my own advice more in future, and avoid using text-align unless absolutely necessary.)
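Where the start value is supported, the “unjustify” case could be sketched like this. The .sidebar class is hypothetical and stands in for whatever context needs unjustified text.

```css
/* Body text is justified by default in this hypothetical template. */
p { text-align: justify; }

/* Turn justification off in one context without choosing a side:
   start resolves to left in ltr text and to right in rtl text. */
.sidebar p { text-align: start; }
```

Because the value follows the direction of each paragraph, the same rule works when ltr and rtl paragraphs are mixed in the same context.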

or this

or this  .

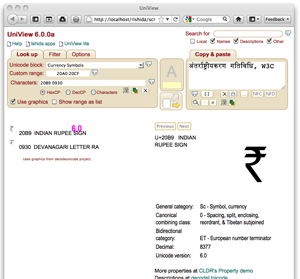

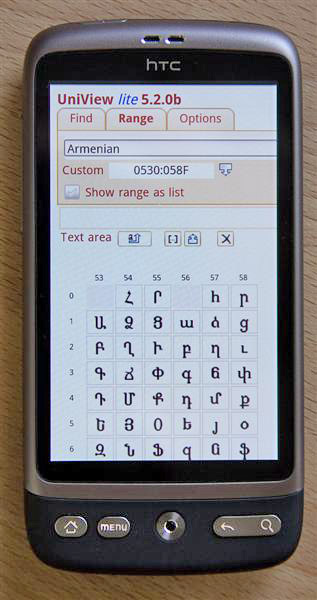

. was fixed, but also extended to handle multiple characters at a time,

opening a separate window for each character. (UniView warns you if you

try to open more than 5 windows.)

was fixed, but also extended to handle multiple characters at a time,

opening a separate window for each character. (UniView warns you if you

try to open more than 5 windows.) was fixed and now puts the character content of the field in the green

box of the Converter Tool. If you need to convert hex or decimal code

point values, do that in the converter.

was fixed and now puts the character content of the field in the green

box of the Converter Tool. If you need to convert hex or decimal code

point values, do that in the converter.

icon. When the background is white (default) details are shown for the character. When the background is orange

icon. When the background is white (default) details are shown for the character. When the background is orange  the character will be added to the text area (like a character map or picker).

the character will be added to the text area (like a character map or picker).

October 11th, 2011 at 1:21 am

Approach 4 is the most logical (no pun intended) way. It would also allow for better styling with both LTR and RTL content together. Also, *-start and *-end make more sense in vertical writing-mode.

October 11th, 2011 at 7:56 am

We went for a similar approach to 3 in the past: instead of using the lang pseudo-class we added an .rtl class to the body serverside. We also had presentational class names like .left and .right (for floats) and .tar and .tal (for text-align). Not ideal but it sort of worked. I agree that just having -start and -end would be very useful.

Another variation could be achieved by using a CSS preprocessor like LESS or SASS. The output would be the same as approach 3, but authoring and maintenance could be simpler?

October 11th, 2011 at 12:49 pm

I was planning to use ‘start’ and ‘end’ values for my CSS template, but I noticed that they are still not supported in Opera.

So I had to use ‘:lang’ with ALL ltr languages:

http://beta.richstyle.org/demo-web-ar.php

Also, the W3C CSS validator returned this warning:

“value start only applies to XSL”!

October 11th, 2011 at 6:00 pm

When you can change the HTML code alongside the CSS, the more practical way is to set specific classes for each language direction, say for instance:

    #header-ltr {
      background: url(images/bg_top-ltr.jpg) no-repeat;
    }

    #header-rtl {
      background: url(images/bg_top-rtl.jpg) no-repeat;
    }

You end up with quite a big bidi style sheet, but with some compression the total weight of the file is not much bigger than for only one language direction.

Of course, it is way easier to start your project with language direction in mind than to change it afterwards.

November 7th, 2011 at 11:08 pm

I personally use something similar to Approach 3, but depending on *dir* instead of *lang*. That way you only need to define one style per text direction. E.g.:

    [dir=rtl] th {
      text-align: right;
    }

    [dir=ltr] th {
      text-align: left;
    }

The only con with that is that [dir] makes that declaration have a higher specificity.